Before we look at what evaluation is, we need to discuss the process that comes before it; the picture is not complete without it. The full picture is Monitoring and Evaluation (M&E), and no evaluation is possible if a project has not been monitored. Here is what that means:

Monitoring is a planned and routine gathering of data from projects or ongoing programmes for four main purposes:

- To learn from experiences so as to improve future strategies and practices;

- To ensure complete accountability of the resources used and the results which were obtained;

- To ensure that future decisions taken for the initiative are well informed decisions;

- To promote the empowerment of the initiative’s beneficiaries.

Monitoring is a recurring task carried out before and during the implementation of a project or programme. It documents results, processes, and experiences so that they can guide decision-making and learning. Monitoring is where we compare our plans with our progress.
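To make "comparing plans with progress" concrete, here is a minimal Python sketch. It assumes each monitored indicator has a planned target and an actual value recorded during routine monitoring; the indicator names, figures, and the 80% "off track" threshold are hypothetical, chosen only for illustration. Real M&E systems define their own indicators and tolerances.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    """One monitored indicator: what was planned versus what has been recorded so far."""
    name: str
    target: float  # planned value for the reporting period (hypothetical)
    actual: float  # value gathered through routine monitoring (hypothetical)

    def achievement(self) -> float:
        """Progress as a percentage of the planned target."""
        if self.target == 0:
            raise ValueError(f"Indicator '{self.name}' has no target to compare against")
        return 100.0 * self.actual / self.target


def flag_off_track(indicators, threshold=80.0):
    """Return the names of indicators whose progress is below the threshold (in %)."""
    return [ind.name for ind in indicators if ind.achievement() < threshold]


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    plan = [
        Indicator("Coaches trained", target=40, actual=36),
        Indicator("Weekly sessions held", target=120, actual=78),
        Indicator("Participants enrolled", target=500, actual=510),
    ]
    for ind in plan:
        print(f"{ind.name}: {ind.achievement():.0f}% of target")
    print("Needs attention:", flag_off_track(plan))
```

With these made-up numbers, only "Weekly sessions held" (65% of target) would be flagged, which is the kind of signal that prompts a closer look at how a project is being run.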

Evaluation, by contrast, is an objective and systematic assessment of a completed project or programme (or of a completed phase of an ongoing project). Evaluations assess data and information that allow those in charge of the project to make strategic decisions, improving the programme's progress in the future.

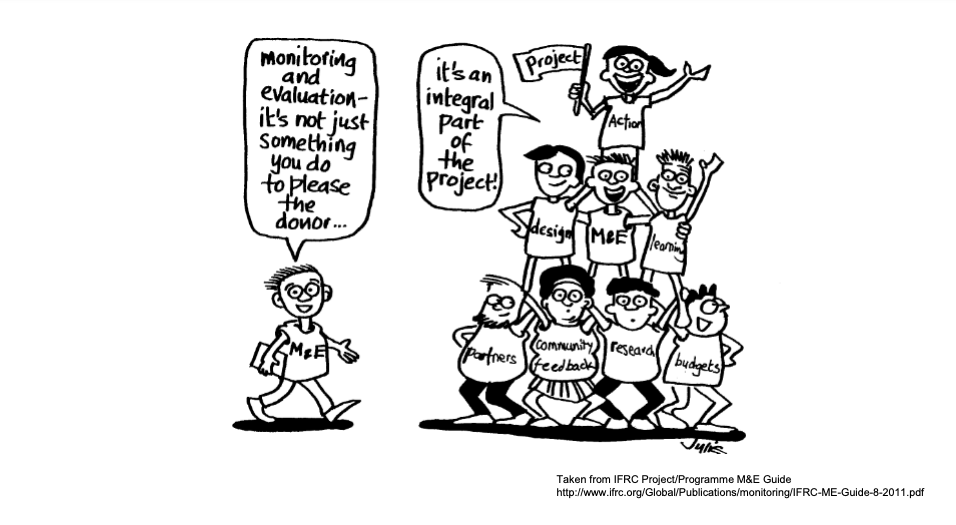

Monitoring & Evaluation

M&E should be embedded deeply in every stage of a project and should not be treated as an optional accessory of a programme or project. Nor should M&E be seen as a control instrument imposed by donors or investors, as this view can cripple how well we carry it out.

In general, monitoring is integral to evaluation. During an evaluation, information from previous monitoring processes is used to understand the ways in which the project or programme can be developed.

According to SportandDev, evaluations should help draw conclusions about five aspects of a project:

- Relevance

- Effectiveness

- Efficiency

- Impact

- Sustainability

Information gathered during monitoring should be tailored to these aspects so that they can be evaluated later.

Evaluation Theories

Evaluation practice builds on several theories. According to American University Online, a few of these are:

Utilization-Focused Evaluation Theory

Michael Quinn Patton, PhD, developed Utilization-Focused Evaluation (UFE) on the premise that "evaluations should be judged by their utility and actual use" (Patton, 2013). This approach should be applied when instrumental use is the ultimate objective. UFE focuses on intended use by intended users, that is, the primary users or participants in your project. The evaluator involves intended users in every phase of the process, giving them a sense of ownership of the evaluation. Patton recommends a 17-step process for conducting UFE.

Values-Engaged Evaluation Theory

Jennifer Greene, PhD, developed Values-Engaged Evaluation (VEE) as a democratic approach that is highly responsive to context and emphasizes stakeholder values. VEE seeks to provide contextualized understandings of social programs that hold particular promise for underserved and underrepresented populations (Greene, 2011). It is considered a "democratic" approach because it encourages the evaluator to include all relevant stakeholder values. VEE is concerned with both broad and in-depth questions, and is better suited to formative than to summative evaluations.

Empowerment Evaluation Theory

David Fetterman, PhD, developed Empowerment Evaluation as a way to foster program improvement through empowerment and self-determination (Fetterman, 2012). Self-determination theory describes an individual's agency to chart his or her own course in life and the ability to identify and express needs. Fetterman believes the evaluator's role is to enable stakeholders to take ownership of the evaluation process as a vehicle for self-determination. Empowerment evaluation seeks to increase the likelihood of program success by giving stakeholders the tools and skills to evaluate themselves and to mainstream evaluation within their organization. Fetterman outlines three basic steps for conducting an empowerment evaluation: (1) develop and refine the "mission," (2) take stock of the situation and prioritize the program's activities, and (3) plan for the future.

Theory-Driven Evaluation

Huey Chen, PhD, is one of the leading proponents of Theory-Driven Evaluation. Chen recognizes that programs exist in an open system consisting of inputs, outputs, and outcomes. He recommends that evaluators begin by working with stakeholders to understand the assumptions and intended logic behind the program. A logic model can be used to show the causal relationships between the activities carried out and the results achieved. Evaluators should consider this approach when working with program implementers to generate meaningful information for formative program improvement.

Types of Evaluation

There are various kinds of evaluation, as we discuss below. Sometimes the distinction is obvious, for instance between self-evaluations and formally commissioned evaluations. At other times, the difference lies in the evaluation method used.

Different research methods produce different results. You should therefore be aware of the approach you are taking, as it will affect how you collect data and the assessment you eventually make.

According to TheToolKit, there are several distinct types of evaluation:

- Process Evaluation: This method examines how the programme is being implemented. It is often carried out during implementation to understand why expected results are not being achieved, or when there are specific concerns about how operations are going.

- Impact Evaluation: These are carried out to determine the causal effects of an intervention, including any unintended effects, positive or negative, on the intended beneficiaries or on others. Such evaluations are expensive to carry out.

- Thematic and Cluster/Sector Evaluation: These focus on particular themes (e.g. gender or environment) or clusters/sectors (e.g. the health sector) and assess the performance of multiple projects across those themes or sectors. These evaluations are large in scope and are probably best left to donor governments and larger NGOs.

- Real-time Evaluations: These are undertaken during project or programme implementation to provide immediate feedback that improves operations over time. Unlike impact evaluation, the aim is not to learn the overall impact over time but to learn as you go. They are especially valuable during emergency operations (IFRC).

- Self-Evaluations or Self-Assessment: NGO staff review programmes or projects and submit reports to senior management. This can also encourage learning and a sense of ownership of the evaluation process among staff. NGO staff may also be better placed than an outside evaluator to understand the complexities of a project or programme. The downside is that self-evaluations can be undermined by internal bias and a tendency to overlook things an external observer would notice. Your argument to your sponsors could be that this is a good way to begin evaluating, and that you do not have the budget for a full-scale evaluation.

- Joint Evaluations: Also called peer evaluation, this involves staff from at least one peer organization conducting the evaluation. This keeps costs relatively low, and the evaluations can be impartial. The main issue is that it requires a high degree of trust between NGOs, so that the organization being evaluated can be transparent during the evaluation. For example, if there is strong competition for funding, NGOs may be reluctant to let a potential rival organization examine their activities, in case it affects them in the long run.

- Évaluations accompagnées (accompanied evaluations): Here, NGOs are accompanied by an external evaluator. This is a good way for NGOs and volunteer organizations to begin their journey with evaluation practice, and it can offer invaluable experience and learning.

Having gone through the literature on evaluation theory and the practical techniques used, we now want to discuss some assumptions that still hinder the use of M&E, along with responses to them.

Common Assumptions About M&E

- Evaluations will be too expensive and monitoring will take too long.

- M&E will expose flaws in the project/programme design and will deliver "wrong" or problematic results.

- M&E requires too much specialization and should be left to the specialists.

- It is better to work only in the "here and now".

Note that these concerns are not unreasonable:

- M&E requires resources.

- M&E is difficult.

- NGOs are often hampered by staff shortages and rapid staff turnover.

Note, however, that M&E is within the capacity and reach of most small NGOs, volunteer organizations, and even individual volunteers.

Why Do We Need M&E?

The value of Monitoring and Evaluation (M&E) is now widely recognized in international development circles.

There are many reasons why NGOs should embrace M&E, not least because a casual attitude towards evaluation undermines their ability to learn, retain, and share operational knowledge.

Despite its many advantages, M&E is often dismissed by NGOs because it is seen as too difficult and expensive. Remember that evaluation does not require a deep understanding of every part of a programme; it is about identifying a few critical indicators of success or failure.

M&E can be helpful to:

Beneficiaries

- M&E can help you show that you are not causing harm, however good your intentions.

- It can form part of a participatory process that ensures you take the assessments of peers into account.

You

- It assesses whether and how the project has achieved its intended objectives, where it has not, and how it could be improved.

- It lets you assess how relevant and meaningful the project was (or was not) for participants.

- It shows how efficiently the project converts resources (financial or otherwise) into actual results.

Donor

- It can provide evidence of proper financial management when the press and other bodies are scrutinizing or criticizing donor funding of NGOs.

- It can open the door to future funding from public bodies.

Some points to remember:

- Evaluation can be a one-off exercise at the end of a project, or it can be an ongoing review.

- In all cases, evaluation is a way to learn from what has happened and to use that knowledge to make informed decisions about the future of your work.

- Evaluation is not just about demonstrating success; it is also about understanding why things do not work.

Approached in this way, and with the involvement and commitment of the whole team, evaluation should help your organization advance by identifying weaknesses and turning them into strengths.

External Links

https://thetoolkit.me/what-is-me/

https://tools4dev.org/resources/how-to-create-an-monitoring-and-evaluation-system/

https://www.sportanddev.org/en/toolkit/monitoring-and-evaluation/what-monitoring-and-evaluation-me
